Mistakes produced by generative AI are becoming a pressing concern as more attorneys bring artificial intelligence tools into their practices. In one recent incident, attorneys submitted court filings that cited entirely fictitious cases, raising alarms about the reliability of AI-generated content in law. The incident illustrates AI hallucination, in which a model produces plausible but false output, and shows how the resulting errors in legal documents can jeopardize a case. As legal AI tools evolve, such missteps can carry severe repercussions for legal professionals and their clients. With attorneys' use of AI growing, firms and courts need robust standards and guidelines to limit these risks and protect the integrity of legal proceedings.

Artificial intelligence has brought new efficiency to the legal sector, but it has also exposed a significant challenge: AI-generated output can be inaccurate. A hallucinating AI system produces erroneous information that, if it reaches a filing, can severely affect a court case. Attorneys who adopt these tools therefore have to scrutinize the reliability of what they generate, and the profession needs to reevaluate how attorneys use AI, with critical oversight at the center. As AI spreads through the legal field, understanding these limits will be crucial for safeguarding justice and maintaining the credibility of legal processes.

The Risks of Generative AI in Legal Practice

The increasing integration of generative AI into legal practice poses significant risks for attorneys. A recent incident highlighted this danger: attorneys submitted documents in a product liability lawsuit that cited non-existent cases, and the judge issued an order to show cause. The situation is a stark reminder that relying on AI without critical oversight can result in severe legal ramifications, including potential sanctions from the court. As AI tools become more prevalent in the legal field, attorneys must remain vigilant and put safeguards in place to prevent such mistakes.

Moreover, AI hallucination, in which generative AI fabricates information, can undermine the integrity of legal documents. In that filing, the attorneys cited cases such as Meyer v. City of Cheyenne that do not exist. A fabricated citation not only jeopardizes the case at hand but also tarnishes the reputation of the attorneys involved. Legal professionals must recognize that while AI can enhance efficiency, it should never replace the critical thinking and thorough research that are foundational to effective legal practice.

Understanding AI Hallucinations and Their Consequences

An AI hallucination occurs when an artificial intelligence system generates false information or fabricates details, which can have dire consequences in legal contexts. In the case of the attorneys who submitted fictitious case references, reliance on an AI tool led to misstatements that exposed them to potential sanctions. As the legal profession increasingly adopts AI tools, understanding the implications of these hallucinations becomes crucial. Legal professionals must be aware of the limits of generative AI and the potential for significant errors that can adversely affect their cases.

Additionally, the consequences of relying on inaccurate AI-generated information extend beyond the courtroom. They can lead to a loss of credibility within the legal community and among clients. Legal practitioners must take proactive steps to ensure that all AI-generated outputs are thoroughly vetted and verified. This includes implementing training sessions on the limitations of AI in legal research and drafting, as well as establishing protocols for cross-checking AI-generated information against established legal sources.

The Role of AI in Modern Legal Workflows

The introduction of AI in law has transformed the way legal professionals approach their work. AI tools can help streamline processes such as document review, legal research, and case management, allowing attorneys to focus more on strategic aspects of their practice. However, the recent incident involving fabricated legal cases highlights the need for caution. While AI can enhance productivity, it is imperative for attorneys to maintain a level of scrutiny over the outputs generated by these tools to avoid potential pitfalls.

Furthermore, as AI continues to evolve, its role in the legal field will likely expand, necessitating a shift in how attorneys are trained. Legal education programs must adapt to incorporate training on the effective use of AI technologies, ensuring that future attorneys are equipped to leverage these tools responsibly. This includes understanding the potential for legal document errors and how to mitigate them through rigorous checks and balances.

Mitigating Legal Document Errors with AI

To mitigate the risk of legal document errors when using AI, law firms must establish strict guidelines for the implementation of generative AI tools. This includes ensuring that all AI-generated documents undergo a thorough review process by experienced attorneys before submission to the court. By fostering a culture of accountability and diligence, law firms can significantly reduce the chances of AI hallucinations impacting their legal work.

Additionally, the adoption of training programs that emphasize the importance of verifying AI outputs can help attorneys develop a discerning eye for quality control. This proactive approach is essential, especially in high-stakes situations where the accuracy of legal documents can determine the outcome of a case. By prioritizing the verification of AI-generated data, legal professionals can uphold the standards of their practice and safeguard against the repercussions of generative AI legal mistakes.
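One way to make the review requirement concrete is a small gate in a firm's document workflow. The following is a minimal Python sketch, not a description of any real product: the `Draft` class, its fields, and the `ready_to_file` function are hypothetical names, and the rule that a single attorney sign-off is enough is an assumption each firm would tune.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Draft:
    """Hypothetical record for a document headed to court."""
    title: str
    ai_assisted: bool
    # Names of attorneys who reviewed the full draft, citations included.
    reviewed_by: List[str] = field(default_factory=list)


def ready_to_file(draft: Draft, required_reviews: int = 1) -> bool:
    """Return True only when an AI-assisted draft has enough sign-offs.

    Assumption: drafts written without AI follow the firm's usual
    process, which this sketch does not model.
    """
    if not draft.ai_assisted:
        return True
    return len(draft.reviewed_by) >= required_reviews


motion = Draft(title="Motion in limine", ai_assisted=True)
print(ready_to_file(motion))   # False: no attorney has signed off yet

motion.reviewed_by.append("Supervising attorney")
print(ready_to_file(motion))   # True: one review is recorded
```

The point of the sketch is that filing is blocked by default: an AI-assisted draft becomes fileable only after a named person takes responsibility for it.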

Court Cases and AI: A Complex Relationship

The relationship between court cases and the application of AI technology is complex and fraught with challenges. The use of AI in legal proceedings has the potential to streamline processes and improve efficiency; however, it also raises questions about the reliability and accuracy of the information generated. The recent example of attorneys citing non-existent cases illustrates the precarious balance that must be maintained when integrating AI into legal workflows.

As courts increasingly encounter AI-generated documents, there is a growing need for legal standards that govern the use of such technology. Judges and legal practitioners must work together to establish guidelines that address the nuances of AI in law, ensuring that the adoption of these tools enhances rather than undermines the judicial process. It is critical for the legal community to stay ahead of these developments to maintain the integrity of the legal system.

Best Practices for Attorneys Using AI

As attorneys begin to incorporate AI tools into their practices, it is essential to develop best practices that prioritize accuracy and ethical standards. This involves creating a framework for how AI tools are utilized in legal research and document drafting. Best practices should include regular training on the limitations of AI and the importance of human oversight in the legal process.

Moreover, law firms should consider establishing internal policies that require attorneys to explicitly verify AI-generated information against credible legal sources. This could involve maintaining a checklist that prompts attorneys to cross-check citations, confirm case details, and ensure that all legal arguments are grounded in factual accuracy. By creating a robust system of checks and balances, attorneys can leverage AI’s benefits while minimizing the risk of errors.
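The checklist described above could be tracked with something as simple as the following Python sketch. It is illustrative only: `CitationCheck`, its three fields, and `unresolved` are hypothetical names, the choice of three checks is an assumption, and the booleans are filled in by a person after looking the case up in a trusted legal source. Nothing here verifies a citation automatically. The first entry is a made-up placeholder; the second is the citation the incident showed to be fabricated.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CitationCheck:
    """One checklist row per citation in an AI-assisted draft (hypothetical)."""
    citation: str
    found_in_trusted_source: bool = False  # located in a court or reporter database
    details_confirmed: bool = False        # court, year, and parties match the draft
    supports_argument: bool = False        # the opinion says what the draft claims

    def passed(self) -> bool:
        # A citation clears the checklist only when every box is ticked.
        return (self.found_in_trusted_source
                and self.details_confirmed
                and self.supports_argument)


def unresolved(checks: List[CitationCheck]) -> List[str]:
    """Return the citations that still need a human to verify them."""
    return [c.citation for c in checks if not c.passed()]


checks = [
    # Placeholder name, marked as fully verified by the reviewing attorney.
    CitationCheck("Example v. Sample (placeholder)", True, True, True),
    # Never found in any source, so every box stays unticked.
    CitationCheck("Meyer v. City of Cheyenne"),
]
print(unresolved(checks))  # ['Meyer v. City of Cheyenne']
```

Defaulting every box to unticked means a citation that nobody looked up is reported as unresolved instead of slipping through.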

The Importance of Human Oversight in AI Applications

Despite the advantages that AI brings to the legal profession, human oversight remains paramount. The reliance on AI-generated outputs without critical evaluation can lead to serious legal implications, as evidenced by the recent case involving fictitious citations. Attorneys must recognize that while AI can assist with research and drafting, it should never replace their expertise and judgment.

Establishing a culture that values human oversight in AI applications can help mitigate the risks of generative AI legal mistakes. This includes encouraging legal teams to collaborate closely when leveraging AI tools and fostering an environment where questioning and critical thinking are integral parts of the process. By prioritizing human involvement, law firms can ensure that the outputs of AI align with the standards of legal practice.

Ethical Considerations for AI in Law

As the legal profession embraces AI, ethical considerations must be at the forefront of discussions regarding its implementation. The potential for AI to generate misleading or fabricated information raises significant ethical concerns about the integrity of legal practice. Attorneys have an obligation to ensure that their submissions to the court are accurate and trustworthy, and reliance on flawed AI outputs undermines this responsibility.

Legal professionals must engage in ongoing conversations about the ethical implications of AI usage, including the need for transparency in how AI-generated information is utilized. By establishing ethical guidelines that govern the use of AI in legal contexts, the profession can promote accountability and protect the interests of clients and the judicial system alike. This proactive approach will be essential as AI technology continues to evolve.

Future Trends in AI and Law

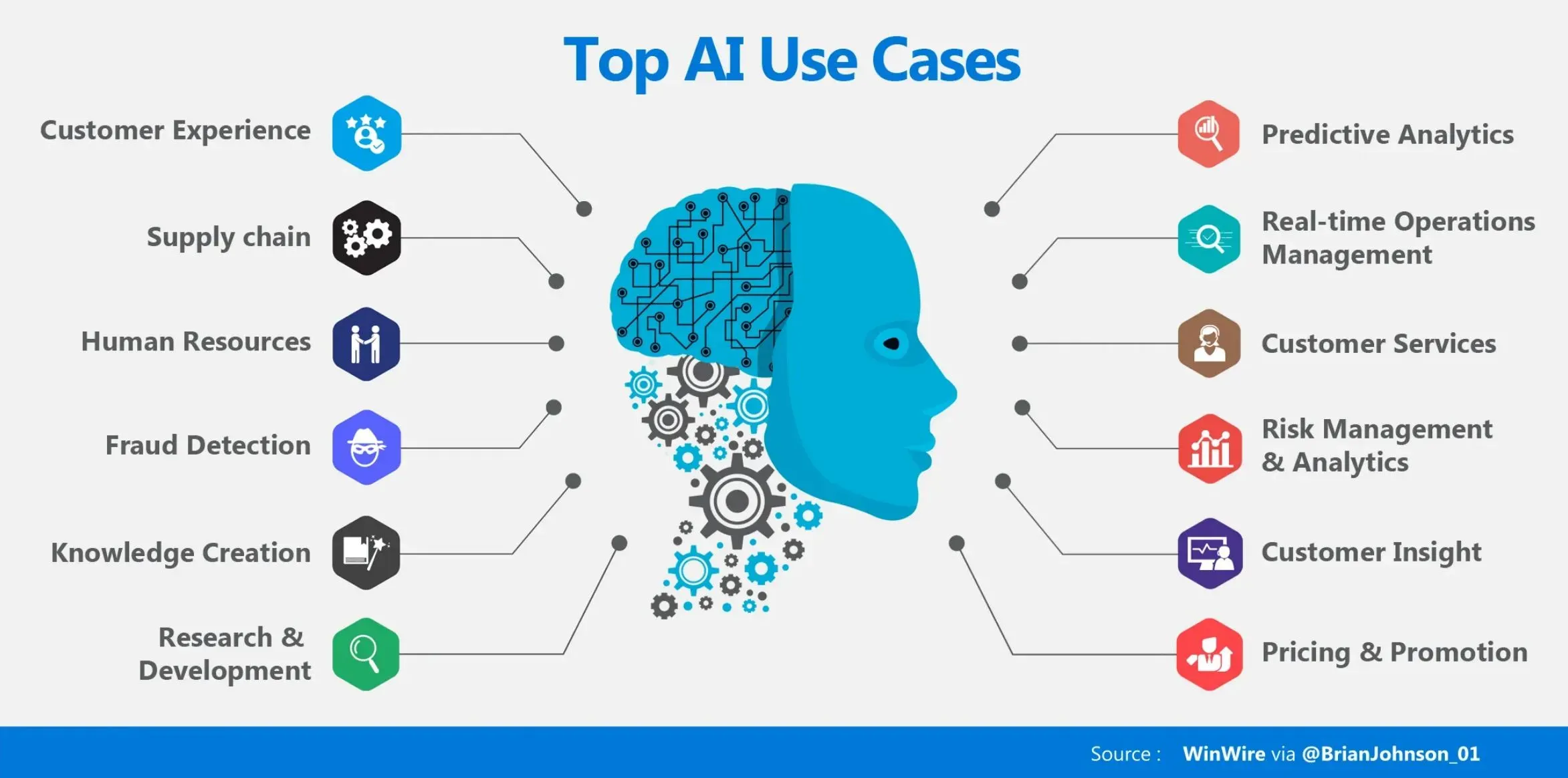

The future of AI in law is poised for rapid development, with emerging technologies promising to transform the legal landscape. As AI tools become more sophisticated, they are likely to enhance various aspects of legal practice, from predictive analytics in case outcomes to automating routine tasks. However, it is crucial for legal professionals to remain vigilant about the potential risks associated with these advancements, particularly the prevalence of AI hallucination and its impact on legal accuracy.

To navigate these future trends successfully, attorneys will need to adopt a mindset of continuous learning and adaptation. This involves staying informed about the latest developments in AI technology, participating in training sessions, and collaborating with AI developers to ensure that tools are designed with the unique needs of the legal profession in mind. By embracing innovation while prioritizing ethical considerations and accuracy, the legal field can harness the full potential of AI.

Frequently Asked Questions

What are generative AI legal mistakes and how do they occur?

Generative AI legal mistakes refer to errors that arise when AI systems, like ChatGPT, produce inaccurate or fabricated legal information, such as citations to non-existent cases. These mistakes can occur due to AI hallucination, where the model generates plausible but false outputs, leading attorneys to mistakenly rely on these inaccuracies in legal documents.

How can AI hallucination lead to legal document errors in court cases?

AI hallucination can lead to legal document errors when attorneys use generative AI to draft filings or motions without verifying the information. This reliance can result in the inclusion of fictitious case citations, which can undermine the credibility of legal arguments and potentially result in sanctions from the court.

What are the implications of AI-caused errors in legal documents?

AI-caused errors in legal documents can have severe implications, including reputational damage for law firms, potential sanctions from judges, and adverse outcomes in court cases. Such errors highlight the importance of diligent verification and the limitations of AI assistance in legal practice.

How should attorneys address generative AI legal mistakes in their practice?

Attorneys should implement strict verification processes when using generative AI tools, ensuring that all legal citations and documents are thoroughly checked for accuracy. Additionally, firms should provide training on the limitations of AI in law and consider adding disclaimers or acknowledgment features to their AI platforms.

What lessons can be learned from recent cases involving AI in law?

Recent cases involving AI in law emphasize the need for attorneys to critically evaluate AI-generated outputs. They serve as cautionary tales that highlight the risks of unverified reliance on technology, suggesting that firms should foster a culture of careful oversight when integrating AI tools into legal workflows.

Can generative AI improve the efficiency of legal practices despite the risks of mistakes?

Yes, generative AI can improve the efficiency of legal practices by automating routine tasks and assisting in legal research. However, it is crucial to balance efficiency with accuracy by implementing safeguards and maintaining human oversight to minimize the risks of generative AI legal mistakes.

What steps can law firms take to prevent generative AI legal mistakes from happening?

Law firms can prevent generative AI legal mistakes by establishing clear protocols for AI usage, providing training on the limitations of AI, incorporating verification steps for AI-generated content, and utilizing tools that require attorneys to acknowledge their responsibility for the outputs produced by AI.

How does attorneys' use of AI tools affect the legal profession?

AI tools affect the legal profession by enhancing productivity and providing new ways to access information. However, they also raise concerns about the accuracy of AI outputs, necessitating a cautious approach to ensure that legal standards and ethical responsibilities are upheld.

What are the potential consequences of submitting false information generated by AI in legal filings?

The potential consequences of submitting false information generated by AI in legal filings can include court-imposed sanctions, damage to the attorney’s reputation, loss of credibility with clients and peers, and even legal repercussions for malpractice if it leads to adverse outcomes in cases.

How can attorneys ensure responsible use of AI in law?

Attorneys can ensure responsible use of AI in law by staying informed about the technology’s capabilities and limitations, establishing ethical guidelines for AI usage, conducting regular audits of AI outputs, and fostering a culture of accountability within their firms.

| Key Point | Details |
|---|---|
| Incident Overview | Attorneys submitted documents in a product liability lawsuit referencing non-existent legal cases. |
| Involved Parties | Plaintiffs filed a complaint against Walmart and Jetson Electric Bikes over a fire allegedly caused by a hoverboard. |
| Legal Proceedings | Judge Kelly Rankin issued an order to show cause regarding the use of fabricated cases. |
| Errors Acknowledged | Attorneys acknowledged that the citations included in their motion were fabricated or incorrect. |
| AI Misuse | The attorneys used OpenAI’s ChatGPT for drafting legal documents which resulted in ‘hallucinated’ case references. |
| Repercussions | The involved attorneys face potential sanctions for their submissions, highlighting the risks of using AI in legal contexts. |
| Firm Response | Morgan & Morgan added features to their AI platform to remind users of AI’s limitations. |
| Lessons Learned | The incident serves as a cautionary tale about the reliance on AI tools for legal work. |

Summary

Generative AI legal mistakes have become a significant concern in the legal community, as demonstrated by a recent incident involving attorneys who submitted documents referencing non-existent cases. This incident highlights the critical need for legal professionals to exercise caution and verify the information generated by AI tools. As the use of generative AI continues to grow in the legal field, understanding its limitations and potential pitfalls is essential to avoid similar errors in the future.